My work on detecting ambiguities in Internet Protocol specifications, RFCScope: Detecting Logical Ambiguities in Internet Protocol Specifications, has been accepted to appear at the ASE 2025 conference in the Research Papers track. This work is from my 6th semester[1] when I was working with Prof. Wenxi Wang from the University of Virginia, along with Lize Shao, who is now a PhD student with Prof. Wenxi; Prof. Yixin Sun from the University of Virginia; and Hyeonmin Lee, who is a postdoctoral researcher working with Prof. Yixin.

Our work presents the first systematic study of technical errata reported in Internet Protocol specifications. These specifications are published by the Internet Engineering Task Force (IETF) as RFCs (Requests for Comments), which are natural language documents written by experts in the field. RFCs are widely used as authoritative references for implementing Internet protocols, and ambiguities in these documents can lead to incorrect software implementations. Each RFC goes through a long drafting and review process before it is published, with many experts scrutinizing the document over several iterations, and yet published RFCs still contain ambiguities. While many of these may not be a problem for domain experts, they can be a significant hurdle for people[2] writing software based on these documents. RFCs are immutable once published, but the IETF provides a mechanism to report errata to keep track of any issues found in the documents. We analyzed 273 verified technical errata reported in RFCs published over the last 11 years and classified them into 7 categories spanning inconsistencies and underspecifications.

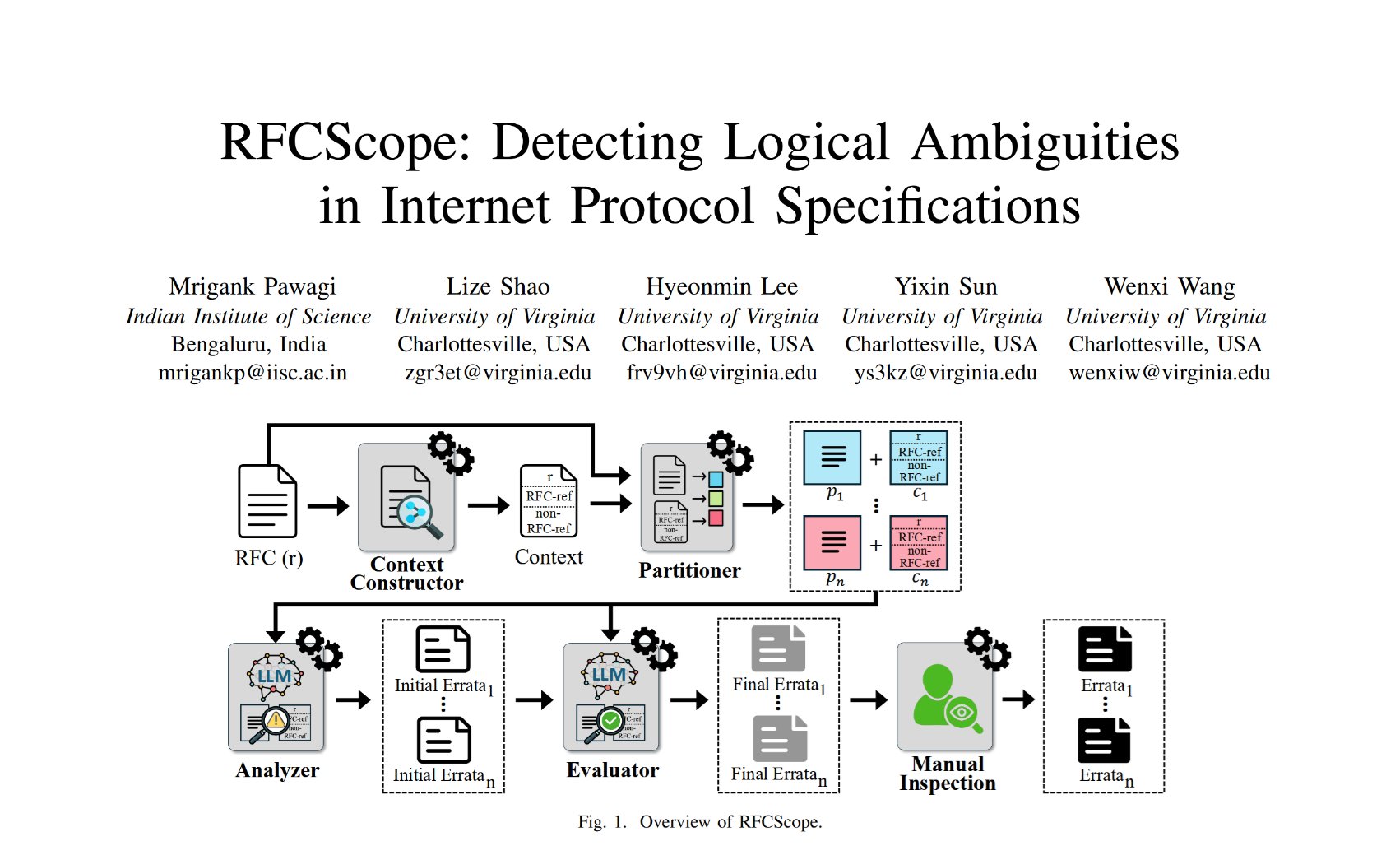

Drawing from our analysis, we developed an LLM-based system, RFCScope, to automatically detect such ambiguities in RFCs. Our system gathers cross-document context and slices each RFC into smaller chunks that are easier for LLMs to process, then uses prompts based on our taxonomy to ask the LLM to identify ambiguities. It also self-evaluates its findings, removing a significant number of false positives while rarely discarding true issues. We evaluated our system on the 20 latest RFCs related to the Domain Name System (DNS) and found 31 previously unreported ambiguities in 14 of them. We were able to confirm 8 of these issues with the authors of the respective RFCs, and 3 of these have been accepted as official errata[3].
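To make the shape of that pipeline concrete, here is a minimal sketch in Python. It is not RFCScope's actual implementation. Every name in it (`split_sections`, `detect`, `self_evaluate`, and the `ask` callable that stands in for an LLM call) is hypothetical. The heading regex is a simplifying assumption about plain-text RFC layout, the two category labels are stand-ins for the seven-category taxonomy, and cross-document context is reduced to a plain string.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Assumption: section headings start at column 0 and look like
# "3.  Terminology" or "4.1.  Overview"; body text is indented.
HEADING = re.compile(r"^(\d+(?:\.\d+)*)\.?[ \t]+(\S.*)$", re.MULTILINE)

# Stand-in labels only; the real taxonomy has seven categories.
CATEGORIES = ["inconsistency", "underspecification"]


@dataclass
class Chunk:
    number: str  # section number, e.g. "4.1"
    title: str
    body: str


@dataclass
class Finding:
    section: str
    category: str
    note: str


def split_sections(rfc_text: str) -> List[Chunk]:
    """Slice an RFC into one chunk per numbered section heading."""
    matches = list(HEADING.finditer(rfc_text))
    chunks = []
    for i, m in enumerate(matches):
        # A section's body runs until the next heading (or end of text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(rfc_text)
        body = rfc_text[m.end():end].strip()
        chunks.append(Chunk(m.group(1), m.group(2).strip(), body))
    return chunks


def detect(chunks: List[Chunk], context: str, categories: List[str],
           ask: Callable[[str], str]) -> List[Finding]:
    """Prompt once per (chunk, category) pair and collect non-empty answers."""
    findings = []
    for chunk in chunks:
        for category in categories:
            prompt = (
                f"Context:\n{context}\n\n"
                f"Section {chunk.number} {chunk.title}:\n{chunk.body}\n\n"
                f"Report any {category} in this section, or reply NONE."
            )
            answer = ask(prompt).strip()
            if answer and answer.upper() != "NONE":
                findings.append(Finding(chunk.number, category, answer))
    return findings


def self_evaluate(findings: List[Finding],
                  ask: Callable[[str], str]) -> List[Finding]:
    """Second pass: keep only the findings the model re-confirms."""
    kept = []
    for f in findings:
        verdict = ask(
            f"Is this a genuine {f.category} in section {f.section}? "
            f"Answer YES or NO.\n\n{f.note}"
        )
        if verdict.strip().upper().startswith("YES"):
            kept.append(f)
    return kept
```

Passing `ask` in as a plain callable keeps the sketch independent of any particular LLM client, so a canned function can stand in for the model when trying it out.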

Our data and tool are available on GitHub.

It was a pleasure working with my collaborators on this project. Lize helped a lot with the manual analysis of errata for both our taxonomy and evaluation, and also supported the literature review for our paper. Prof. Yixin and Hyeonmin were very helpful in providing relevant insights from the networking domain, and Prof. Yixin also made it possible for us to get in touch with the authors of several RFCs to validate our findings. Hyeonmin also created beautiful illustrations for our paper, like the one in the image above. And of course, Prof. Wenxi was a wonderful mentor throughout the project, providing me with a lot of autonomy while also always being available for discussions and feedback. I am very happy to be a part of the first paper from her group and look forward to more collaborations in the future.

Thrilled to share that our RFCScope paper, the very first paper authored by my intern and PhD student under my supervision, has been accepted to ASE 2025! I’m so proud of their hard work and excited to see it presented at ASE. pic.twitter.com/8bQ5WfQqm1

— Wenxi Wang (@WenxiWang4) September 26, 2025

1. I only had a few courses to take that semester and nothing to do for my upcoming summer internship at Microsoft Research, so I was very happy when Prof. Wenxi offered me this opportunity.

2. Or lately, large language models (LLMs) that are being increasingly used to automatically generate code based on natural language specifications.